Archive for the ‘AI’ Category

TED Talks Physician Scientist Dr. Eric Topol – can AI catch what doctors miss

Saturday, August 24th, 2024

Ollama & Open WebUI – the free Large Language Model (LLM) 'phi3.5' from Microsoft overtakes other AI models

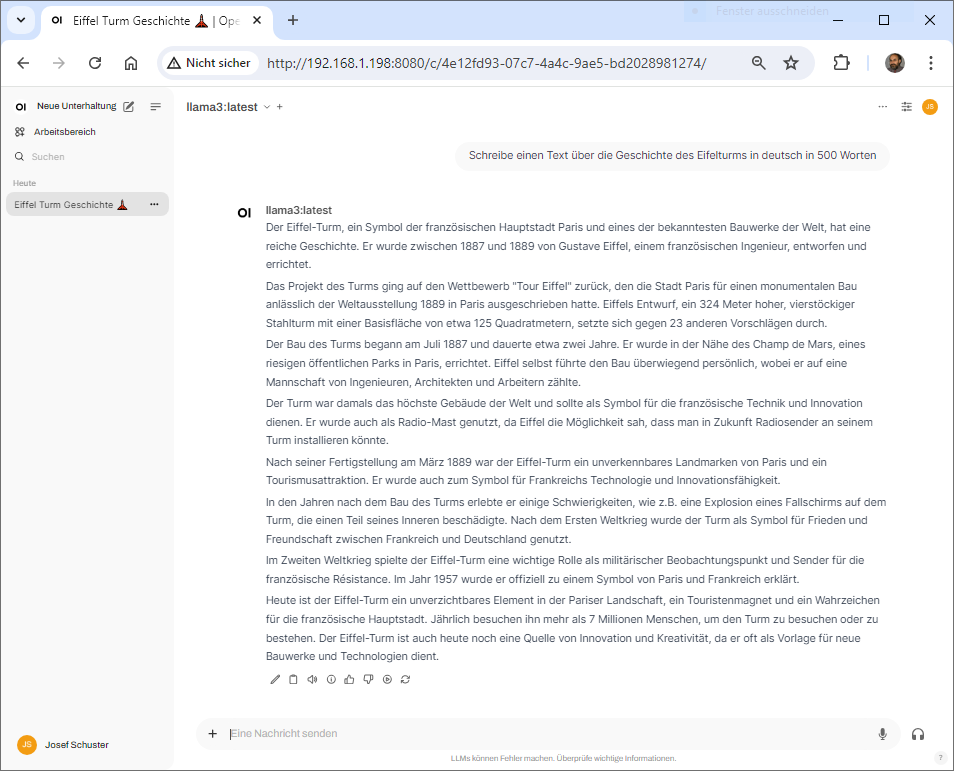

Wednesday, August 21st, 2024

root@pve-ai-llm-12:~# docker exec -it ollama ollama run phi3.5

Ollama & AnythingLLM – chat privately with your documents

Wednesday, August 14th, 2024

HPE ProLiant DL384 Gen12 with NVIDIA GH200 NVL2 – this next-generation 2P server provides next-level performance for enterprise AI, enabling a new era of AI, e.g. with Ollama & Open WebUI

Thursday, August 8th, 2024

LlamaCards – a web frontend that provides a dynamic interface for interacting with Large Language Models (LLMs) in real time, using Ollama as the backend

Wednesday, August 7th, 2024

Microsoft Windows 11 – install WSL2 with NVIDIA CUDA 11.8

Tuesday, August 6th, 2024

Google Research Med-PaLM 2 – a large language model (LLM) designed to provide high quality answers to medical questions

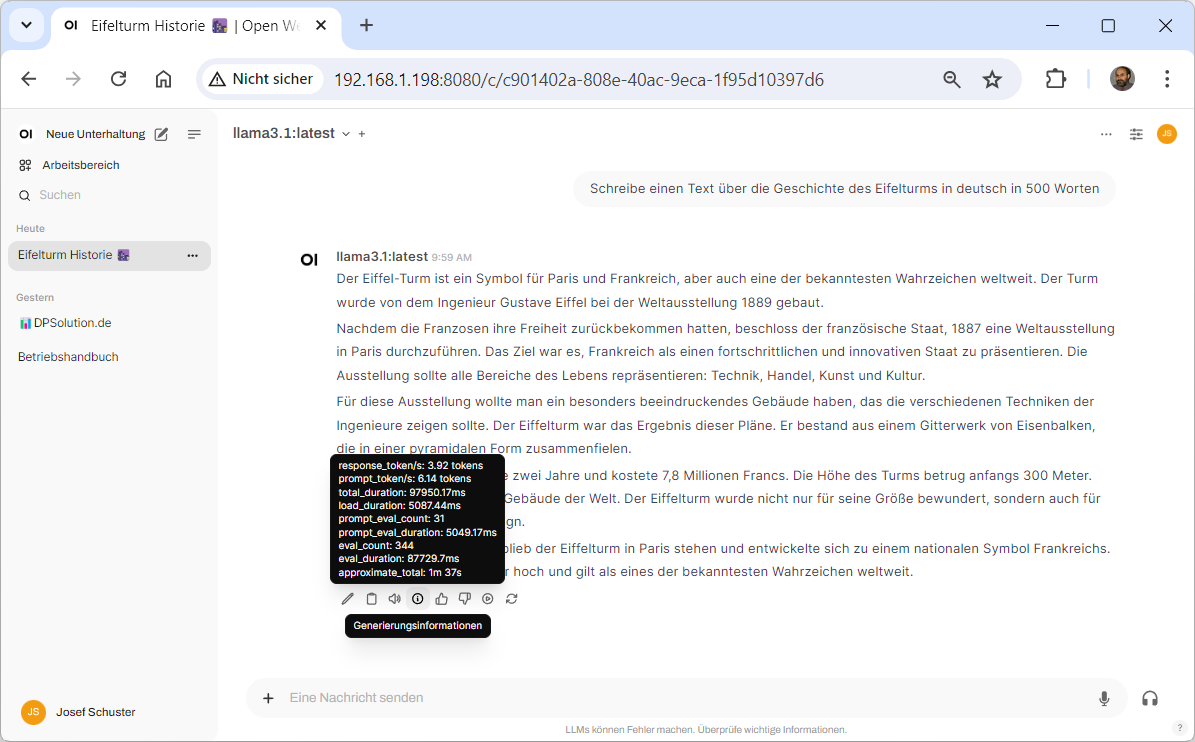

Tuesday, August 6th, 2024

Ollama & Open WebUI – Llama 3.1 is a new state-of-the-art model from Meta AI available in 8B

Wednesday, July 24th, 2024

root@pve-ai-llm-11:~# docker exec -it ollama ollama pull llama3.1

pulling manifest

pulling 87048bcd5521… 100% ▕███████████████████████████████████████████▏ 4.7 GB

pulling 11ce4ee3e170… 100% ▕█████████████████████████████████████████████▏ 1.7 KB

pulling f1cd752815fc… 100% ▕█████████████████████████████████████████████▏ 12 KB

pulling 56bb8bd477a5… 100% ▕███████████████████████████████████████████▏ 96 B

pulling e711233e7343… 100% ▕████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

removing any unused layers

success

root@pve-ai-llm-11:~#

Mozilla Innovation Project 'llamafile' – bringing LLMs to the people and to your own computer

Sunday, July 21st, 2024

Mozilla Innovation Project 'llamafile' – an open source initiative that collapses all the complexity of a full-stack LLM chatbot down to a single file that runs on six operating systems

Ollama & Open WebUI – how to customize a Large Language Model (LLM)

Thursday, July 18th, 2024

root@pve-ai-llm-11:~# docker exec -it ollama ollama list

NAME ID SIZE MODIFIED

openhermes:latest 95477a2659b7 4.1 GB 5 days ago

llama3:latest 365c0bd3c000 4.7 GB 7 days ago

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# docker exec -it ollama ollama show llama3 --modelfile

# Modelfile generated by "ollama show"

# To build a new Modelfile based on this, replace FROM with:

# FROM llama3:latest

FROM /root/.ollama/models/blobs/sha256-6a0746a1ec1aef3e7ec53868f220ff6e389f6f8ef87a01d77c96807de94ca2aa

TEMPLATE "{{ if .System }}<|start_header_id|>system<|end_header_id|>

{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>

{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>

{{ .Response }}<|eot_id|>"

PARAMETER num_keep 24

PARAMETER stop <|start_header_id|>

PARAMETER stop <|end_header_id|>

PARAMETER stop <|eot_id|>

LICENSE "META LLAMA 3 COMMUNITY LICENSE AGREEMENT

root@pve-ai-llm-11:~#

ollama show phi --modelfile > new.modelfile

ollama create new-phi --file new.modelfile
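The two commands above export an existing Modelfile and build a new model from it. As a minimal sketch of the step in between, the snippet below writes a small custom Modelfile from scratch. The helper name `write_modelfile`, the file name `custom.modelfile`, the temperature value, and the system prompt are all illustrative assumptions and do not come from the original post.

```shell
#!/bin/sh
# write_modelfile BASE_MODEL OUTPUT_FILE
# Hypothetical helper: writes a minimal Modelfile that derives from BASE_MODEL.
write_modelfile() {
    cat > "$2" <<EOF
FROM $1
PARAMETER temperature 0.3
SYSTEM """You are a concise assistant for Linux administration questions."""
EOF
}

# Generate the file and show it.
write_modelfile llama3 custom.modelfile
cat custom.modelfile

# Possible next step (the model name llama3-admin is made up for illustration):
#   ollama create llama3-admin --file custom.modelfile
```

When Ollama runs in a container as in this setup, the file has to be visible inside that container first, for example via `docker cp custom.modelfile ollama:/root/custom.modelfile`.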

Google NotebookLM – is your personalized AI research assistant powered by Google’s most capable model Gemini 1.5 Pro

Wednesday, July 17th, 2024

Google NotebookLM – use the power of AI for quick summarization and note taking

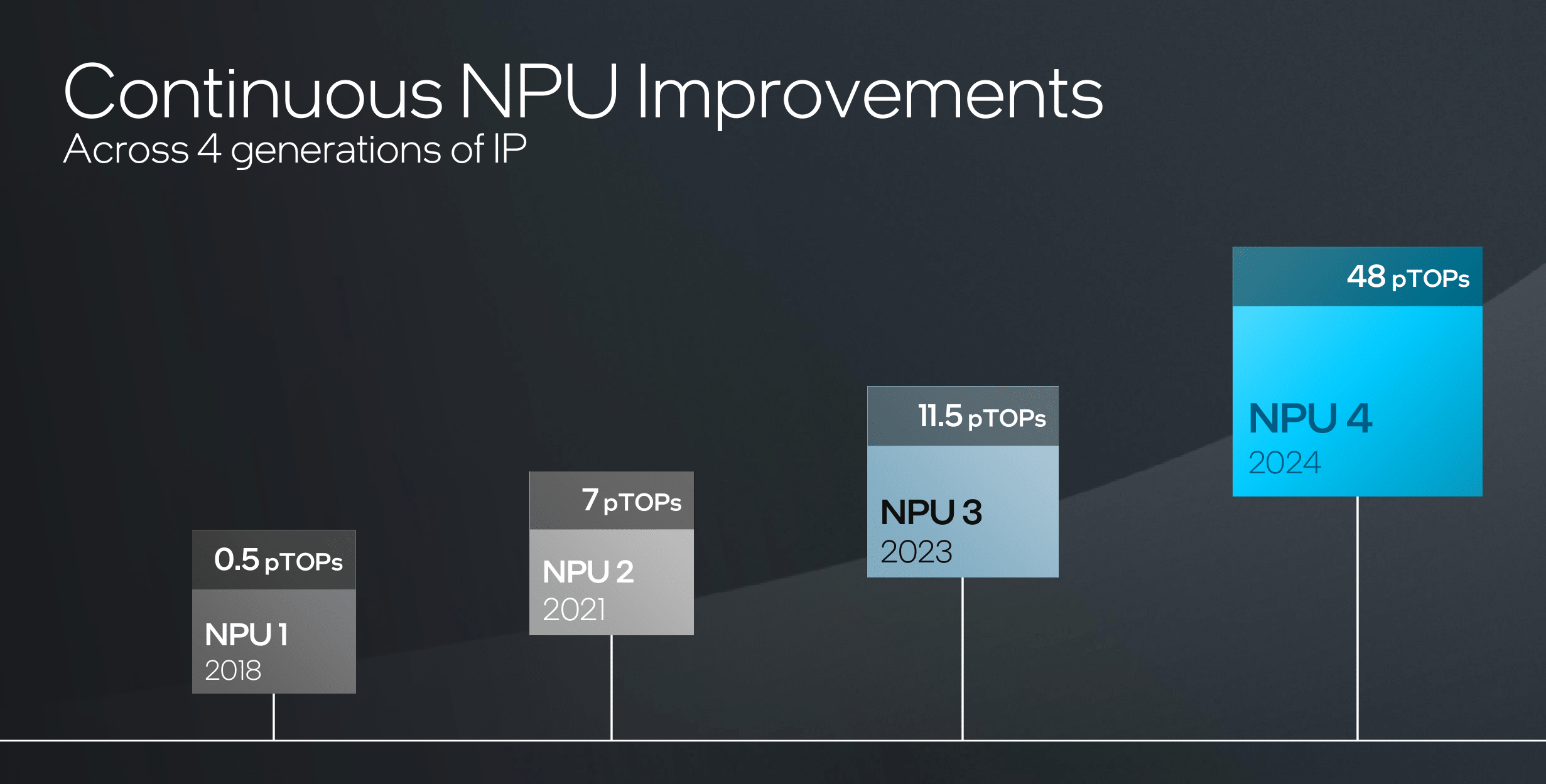

TOPS – quantifies an NPU’s processing capabilities by measuring the number of operations (additions, multiplies, etc.) in trillions executed within a second

Tuesday, July 16th, 2024

TOPS = 2 × MAC unit count × Frequency / 1 trillion

A Multiply-Accumulate (MAC) operation executes the mathematical formulas at the core of AI workloads. Each MAC counts as two operations (one multiply and one accumulate), which is where the factor of 2 comes from.

Frequency is the clock speed (cycles per second) at which an NPU and its MAC units (as well as a CPU or GPU) operate, and it directly influences overall performance.

Precision refers to the granularity of calculations; higher precision typically correlates with increased model accuracy at the expense of computational intensity.
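To make the formula concrete, here is a small sketch that evaluates it with `awk`. The NPU figures (4096 MAC units at 1 GHz) are a made-up example and do not describe any specific product.

```shell
#!/bin/sh
# tops MAC_UNITS FREQUENCY_HZ
# Applies TOPS = 2 x MAC unit count x frequency / 1 trillion.
tops() {
    awk -v macs="$1" -v hz="$2" 'BEGIN { printf "%.2f\n", 2 * macs * hz / 1e12 }'
}

# Hypothetical NPU: 4096 MAC units clocked at 1 GHz (1000000000 Hz).
tops 4096 1000000000   # prints 8.19
```

The result is a theoretical peak at one given precision; the formula itself says nothing about how much of that peak a real workload reaches.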

Ollama & Open WebUI – now available as an official Docker image

Thursday, July 11th, 2024

## Install Docker ##

root@pve-ai-llm-11:~# apt-get install curl

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# curl -fsSL https://get.docker.com -o get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# ls -la

total 48

drwx------ 4 root root 4096 Jul 30 09:32 .

drwxr-xr-x 21 root root 4096 Jul 30 09:23 ..

-rw-r--r-- 1 root root 3106 Apr 22 13:04 .bashrc

drwx------ 2 root root 4096 Jul 30 09:23 .cache

-rw-r--r-- 1 root root 161 Apr 22 13:04 .profile

drwx------ 2 root root 4096 May 7 09:12 .ssh

-rw-r--r-- 1 root root 21582 Jul 30 09:32 get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# chmod +x get-docker.sh

root@pve-ai-llm-11:~# ls -la

total 48

drwx------ 4 root root 4096 Jul 30 09:32 .

drwxr-xr-x 21 root root 4096 Jul 30 09:23 ..

-rw-r--r-- 1 root root 3106 Apr 22 13:04 .bashrc

drwx------ 2 root root 4096 Jul 30 09:23 .cache

-rw-r--r-- 1 root root 161 Apr 22 13:04 .profile

drwx------ 2 root root 4096 May 7 09:12 .ssh

-rwxr-xr-x 1 root root 21582 Jul 30 09:32 get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# ./get-docker.sh

# Executing docker install script, commit: 1ce4e39c9502b89728cdd4790a8c3895709e358d

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq ca-certificates curl >/dev/null

+ sh -c install -m 0755 -d /etc/apt/keyrings

+ sh -c curl -fsSL "https://download.docker.com/linux/ubuntu/gpg" -o /etc/apt/keyrings/docker.asc

+ sh -c chmod a+r /etc/apt/keyrings/docker.asc

+ sh -c echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu noble stable" > /etc/apt/sources.list.d/docker.list

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq docker-ce docker-ce-cli containerd.io docker-compose-plugin docker-ce-rootless-extras docker-buildx-plugin >/dev/null

+ sh -c docker version

Client: Docker Engine - Community

Version: 27.1.1

API version: 1.46

Go version: go1.21.12

Git commit: 6312585

Built: Tue Jul 23 19:57:14 2024

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 27.1.1

API version: 1.46 (minimum version 1.24)

Go version: go1.21.12

Git commit: cc13f95

Built: Tue Jul 23 19:57:14 2024

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.7.19

GitCommit: 2bf793ef6dc9a18e00cb12efb64355c2c9d5eb41

runc:

Version: 1.1.13

GitCommit: v1.1.13-0-g58aa920

docker-init:

Version: 0.19.0

GitCommit: de40ad0

================================================================================

To run Docker as a non-privileged user, consider setting up the

Docker daemon in rootless mode for your user:

dockerd-rootless-setuptool.sh install

Visit https://docs.docker.com/go/rootless/ to learn about rootless mode.

To run the Docker daemon as a fully privileged service, but granting non-root

users access, refer to https://docs.docker.com/go/daemon-access/

WARNING: Access to the remote API on a privileged Docker daemon is equivalent

to root access on the host. Refer to the 'Docker daemon attack surface'

documentation for details: https://docs.docker.com/go/attack-surface/

================================================================================

root@pve-ai-llm-11:~#

## with a CPU only ##

root@pve-ai-llm-11:~# docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama --restart always ollama/ollama

Unable to find image 'ollama/ollama:latest' locally

latest: Pulling from ollama/ollama

7646c8da3324: Pull complete

128e3f309605: Pull complete

44384cad8fa3: Pull complete

Digest: sha256:35f2654eaa3897bd6045afc2b06b4ac00c64de9f41dc9f6a8d9f51c02cfd6d30

Status: Downloaded newer image for ollama/ollama:latest

a2e7dd96f5ba6d95f249704bb68c866fa356414a99a19e09bdbe5b3f07ab04c9

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:1408fec50309afee38f3535383f5b09419e6dc0925bc69891e79d84cc4cdcec6

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

root@pve-ai-llm-11:~#

## Run a model ##

root@pve-ai-llm-11:~# docker exec -it ollama ollama run llama3

pulling manifest

pulling 6a0746a1ec1a… 100% █████████████████████████████████████████████▏ 4.7 GB

pulling 4fa551d4f938… 100% █████████████████████████████████████████████▏ 12 KB

pulling 8ab4849b038c… 100% ████████████████████████████████████████████▏ 254 B

pulling 577073ffcc6c… 100% ▕█████████████████████████████████████████████▏ 110 B

pulling 3f8eb4da87fa… 100% ▕████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

removing any unused layers

success

>>> help

I’d be happy to help you with whatever you need. Please let me know what’s on your mind and how I can assist you.

Do you have a specific question or topic in mind, or are you just looking for some general guidance?

>>> /bye

root@pve-ai-llm-11:~#
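Besides the interactive prompt, the container also exposes Ollama's HTTP API on the published port 11434. The following is a minimal sketch of a non-interactive request against the `/api/generate` endpoint. The helper names `generate_payload` and `ask` and the `OLLAMA_URL` variable are illustrative assumptions, and the payload builder assumes its arguments contain no quotes or backslashes.

```shell
#!/bin/sh
# Base URL of the Ollama API; matches the -p 11434:11434 mapping used above.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# generate_payload MODEL PROMPT
# Builds the JSON request body. Assumes neither argument contains
# double quotes or backslashes (no JSON escaping is done here).
generate_payload() {
    printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# ask MODEL PROMPT
# Sends the request and prints the raw JSON answer from Ollama.
ask() {
    curl -fsS "$OLLAMA_URL/api/generate" -d "$(generate_payload "$1" "$2")"
}

# Show the request body that would be sent (no network access needed).
generate_payload llama3 "Why is the sky blue?"
```

With the container running and the model pulled, `ask llama3 "Why is the sky blue?"` returns a JSON document whose `response` field holds the generated text.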

## Create the Open WebUI container ##

root@pve-ai-llm-11:~# docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Unable to find image 'ghcr.io/open-webui/open-webui:main' locally

main: Pulling from open-webui/open-webui

f11c1adaa26e: Pull complete

4ad0c7422f5c: Pull complete

f2bf536a1e4f: Pull complete

3bdbfec22900: Pull complete

83396b6ad4cc: Pull complete

82b2e523b77f: Pull complete

4f4fb700ef54: Pull complete

dc24a9093de1: Pull complete

dd27fb166be3: Pull complete

958fcb957c53: Pull complete

5b9147962751: Pull complete

ad63e135fcf4: Pull complete

9648b911f4a0: Pull complete

daf0cd29e6e0: Pull complete

edec677f39e7: Pull complete

aac4b2ca7a13: Pull complete

Digest: sha256:f53d1dbd8d9bd6a5297ba5efc63618df750b1dc0b5a8b0c1e5600380808eaf73

Status: Downloaded newer image for ghcr.io/open-webui/open-webui:main

25e84cfbcecaf360f900078401905241d45c18858011da84f07776ee196f4b90

root@pve-ai-llm-11:~#
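The container needs a moment to start before the web interface answers. As a rough sketch, a small polling helper can wait for it; the function name `wait_for_http` and its default retry values are assumptions made for illustration. With `--network=host`, Open WebUI should be reachable on port 8080 of the host.

```shell
#!/bin/sh
# wait_for_http URL [TRIES] [DELAY_SECONDS]
# Polls URL until curl gets a successful response.
# Returns 0 once the URL answers, 1 if it never does within TRIES attempts.
wait_for_http() {
    url=$1
    tries=${2:-30}
    delay=${3:-2}
    i=0
    while [ "$i" -lt "$tries" ]; do
        if curl -fsS --max-time 5 -o /dev/null "$url" 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

A possible call would be `wait_for_http http://127.0.0.1:8080 && echo "Open WebUI is up"`.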

root@pve-ai-llm-11:~# docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

89bdc73699ba open-webui 0.15% 686MiB / 12GiB 5.58% 0B / 0B 1.63GB / 81.9kB 12

d84632f5a629 ollama 0.00% 602.3MiB / 12GiB 4.90% 1.87kB / 822B 2.32GB / 1.24GB 10

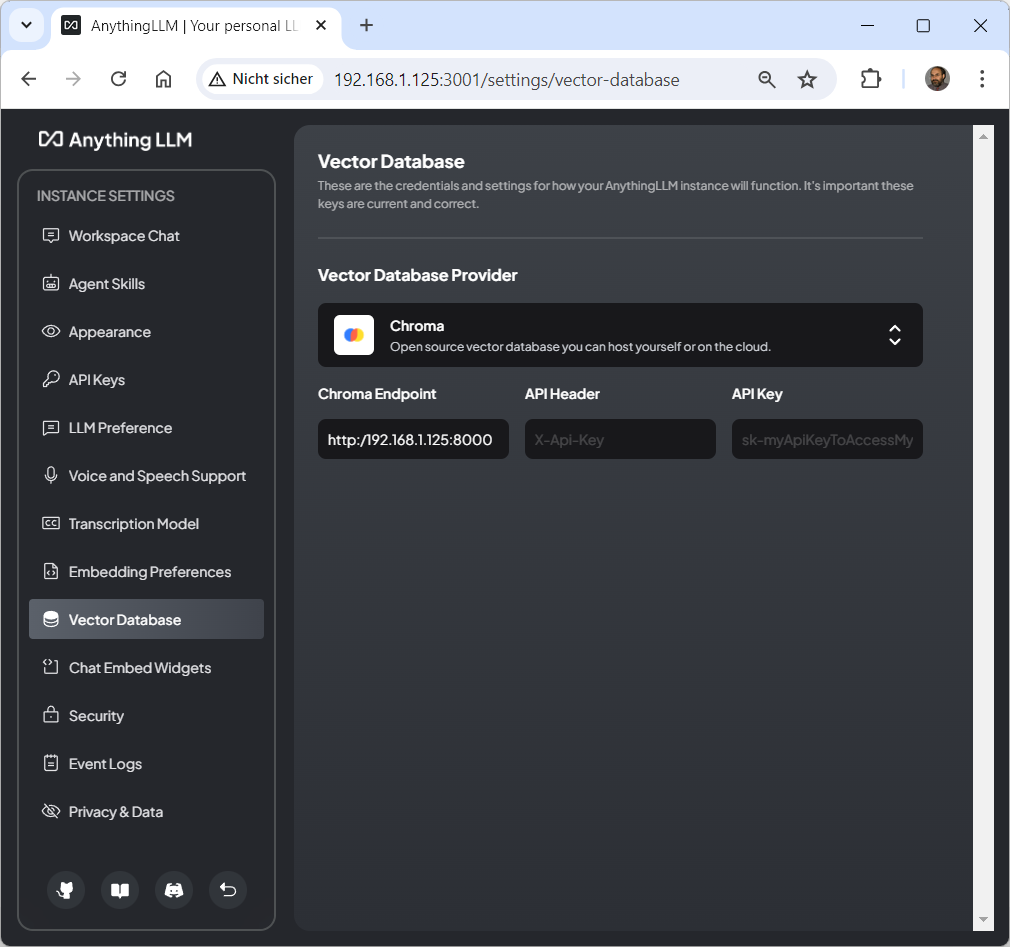

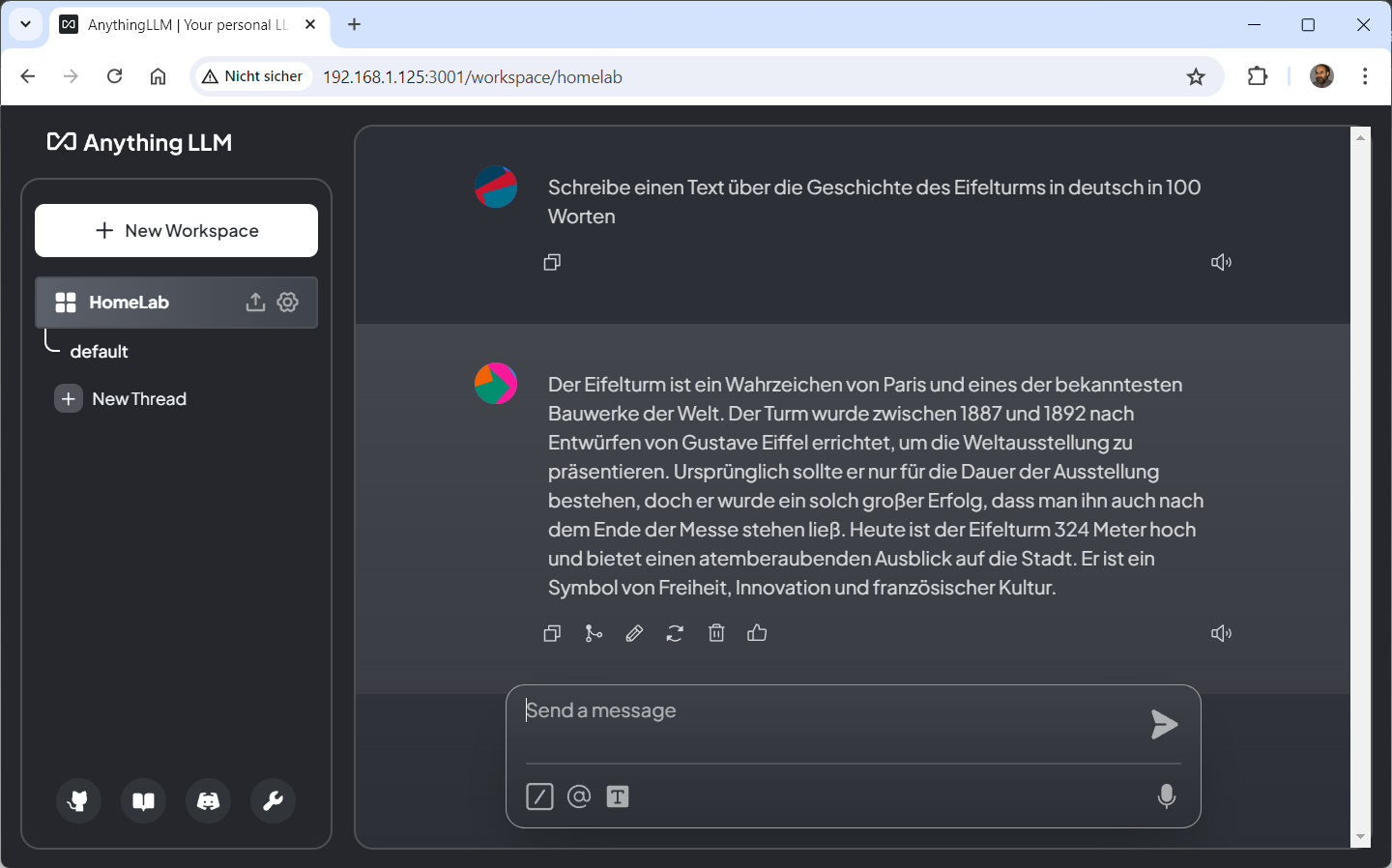

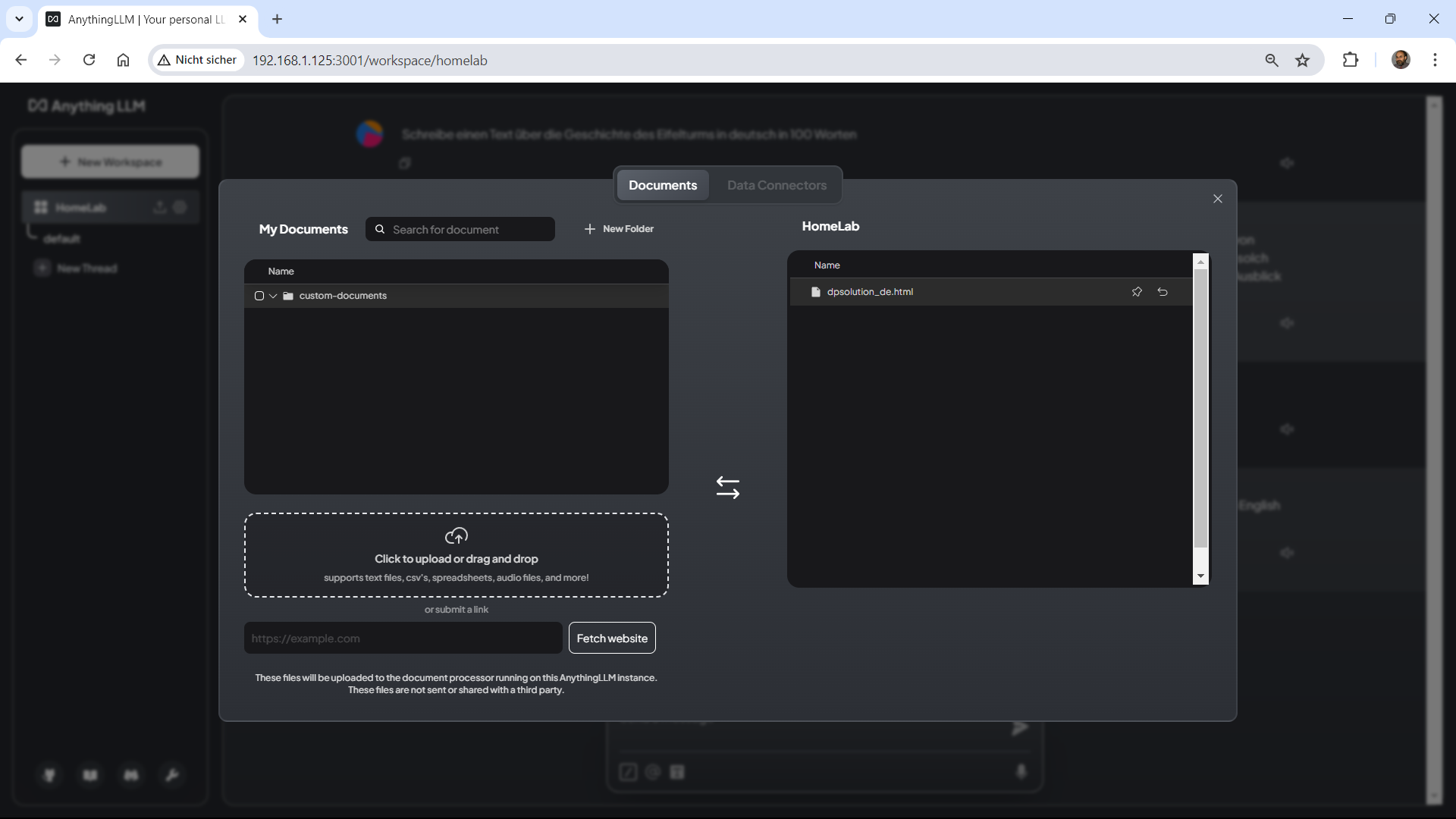

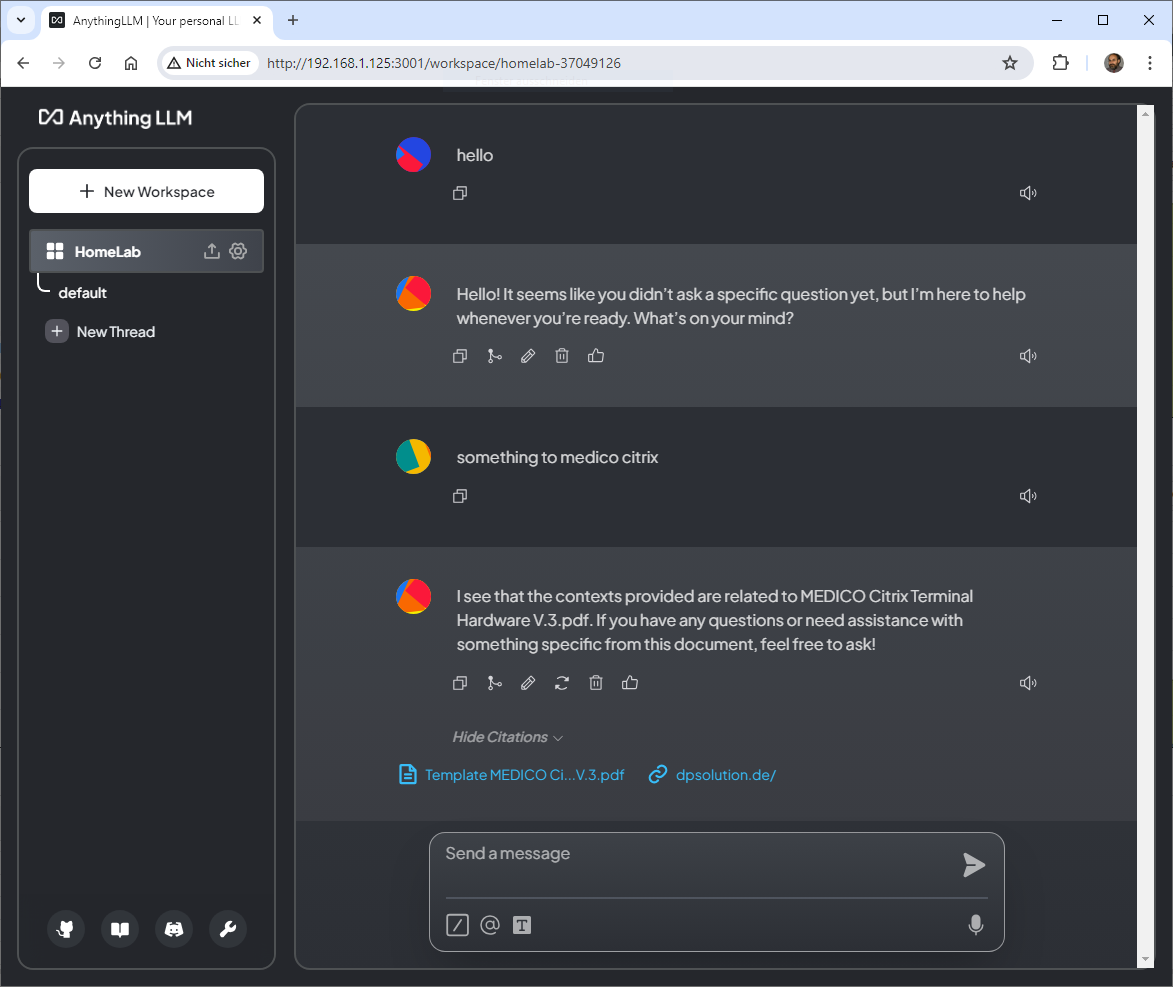

Proxmox Virtual Environment (VE) 8.2.4 – how to use your first local 'Meta Llama 3' Large Language Model (LLM) project with Open WebUI and now with AnythingLLM (with a Chroma Vector Database) used as a Retrieval Augmented Generation (RAG) system

Sunday, July 7th, 2024

root@pve-ai-llm-02:~# git clone https://github.com/chroma-core/chroma && cd chroma

Cloning into 'chroma'...

remote: Enumerating objects: 39779, done.

remote: Counting objects: 100% (7798/7798), done.

remote: Compressing objects: 100% (1362/1362), done.

remote: Total 39779 (delta 6967), reused 6802 (delta 6356), pack-reused 31981

Receiving objects: 100% (39779/39779), 320.34 MiB | 11.29 MiB/s, done.

Resolving deltas: 100% (25736/25736), done.

root@pve-ai-llm-02:~/chroma#

root@pve-ai-llm-02:~/chroma# docker compose up -d --build

WARN[0000] The "CHROMA_SERVER_AUTHN_PROVIDER" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_SERVER_AUTHN_CREDENTIALS_FILE" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_SERVER_AUTHN_CREDENTIALS" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_AUTH_TOKEN_TRANSPORT_HEADER" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_OTEL_EXPORTER_ENDPOINT" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_OTEL_EXPORTER_HEADERS" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_OTEL_SERVICE_NAME" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_OTEL_GRANULARITY" variable is not set. Defaulting to a blank string.

WARN[0000] The "CHROMA_SERVER_NOFILE" variable is not set. Defaulting to a blank string.

WARN[0000] /root/chroma/docker-compose.yml: `version` is obsolete

[+] Building 100.1s (17/17) FINISHED

=> [server internal] load build definition from Dockerfile

=> => transferring dockerfile: 1.32kB

=> [server internal] load metadata for docker.io/library/python:3.11-slim-bookworm

=> [server internal] load .dockerignore

=> => transferring context: 131B

=> [server builder 1/6] FROM docker.io/library/python:3.11-slim-bookworm@sha256:aad3c9cb248194ddd1b98860c2bf41ea7239c384ed51829cf38dcb3569deb7f1

=> => resolve docker.io/library/python:3.11-slim-bookworm@sha256:aad3c9cb248194ddd1b98860c2bf41ea7239c384ed51829cf38dcb3569deb7f1

=> => sha256:642b83290b5254bbe4bf72ee85b86b3496689d263e237b379039bced52fe358d 1.94kB / 1.94kB

=> => sha256:c8413a70b2b7bf9cc5c0d240b06d5bc61add901ecaf2d5621dbce4bcb18875d0 6.89kB / 6.89kB

=> => sha256:f11c1adaa26e078479ccdd45312ea3b88476441b91be0ec898a7e07bfd05badc 29.13MB / 29.13MB

=> => sha256:c1ffa773372df0248c21b3d0965cc0197074d66e5ca8d6e23d6fcdd43a39ab45 3.51MB / 3.51MB

=> => sha256:bb03a6d9f5bc4d62b6c0fe02b885a4bdf44b5661ff5d3a3112bac4f16c8e0fe4 12.87MB / 12.87MB

=> => sha256:aad3c9cb248194ddd1b98860c2bf41ea7239c384ed51829cf38dcb3569deb7f1 9.12kB / 9.12kB

=> => sha256:3012e1cab3ddadfb1f5886d260c06da74fc1cb0bf8ca660ec2306ac9ce87fc8c 231B / 231B

=> => sha256:293c7f22380c8fd647d1dc801d163d33cf597052de2b5b0e13b72a1843b9c0cc 3.21MB / 3.21MB

=> => extracting sha256:f11c1adaa26e078479ccdd45312ea3b88476441b91be0ec898a7e07bfd05badc

=> => extracting sha256:c1ffa773372df0248c21b3d0965cc0197074d66e5ca8d6e23d6fcdd43a39ab45

=> => extracting sha256:bb03a6d9f5bc4d62b6c0fe02b885a4bdf44b5661ff5d3a3112bac4f16c8e0fe4

=> => extracting sha256:3012e1cab3ddadfb1f5886d260c06da74fc1cb0bf8ca660ec2306ac9ce87fc8c

=> => extracting sha256:293c7f22380c8fd647d1dc801d163d33cf597052de2b5b0e13b72a1843b9c0cc

=> [server internal] load build context

=> => transferring context: 29.42MB

=> [server final 2/7] RUN mkdir /chroma

=> [server builder 2/6] RUN apt-get update --fix-missing && apt-get install -y --fix-missing build-essential gcc g++ cmake autoconf && r

=> [server final 3/7] WORKDIR /chroma

=> [server builder 3/6] WORKDIR /install

=> [server builder 4/6] COPY ./requirements.txt requirements.txt

=> [server builder 5/6] RUN pip install --no-cache-dir --upgrade --prefix="/install" -r requirements.txt

=> [server builder 6/6] RUN if [ "$REBUILD_HNSWLIB" = "true" ]; then pip install --no-binary :all: --force-reinstall --no-cache-dir --prefix="/install" chroma-h

=> [server final 4/7] COPY --from=builder /install /usr/local

=> [server final 5/7] COPY ./bin/docker_entrypoint.sh /docker_entrypoint.sh

=> [server final 6/7] COPY ./ /chroma

=> [server final 7/7] RUN apt-get update --fix-missing && apt-get install -y curl && chmod +x /docker_entrypoint.sh && rm -rf /var/lib/apt/lists/*

=> [server] exporting to image

=> => exporting layers

=> => writing image sha256:eee7257aeb16c8cb97561de427f1f7265f37e7f706f066cc0147f775abc68d15

=> => naming to docker.io/library/server

[+] Running 3/3

✔ Network chroma_net Created

✔ Volume "chroma_chroma-data" Created

✔ Container chroma-server-1 Started

root@pve-ai-llm-02:~#

## Launch AnythingLLM Web Desktop for Linux ##