Archive for July 11th, 2024

The demanding daily life of a robot programmer - what you get for it: € 2,565 net

Thursday, July 11th, 2024

Red Bull - across a 3.6 kilometer line suspended above the Messina Straits between mainland Italy and Sicily

Thursday, July 11th, 2024

Samsung Galaxy Ring - its lightweight titanium frame makes the ring comfortable to wear all day

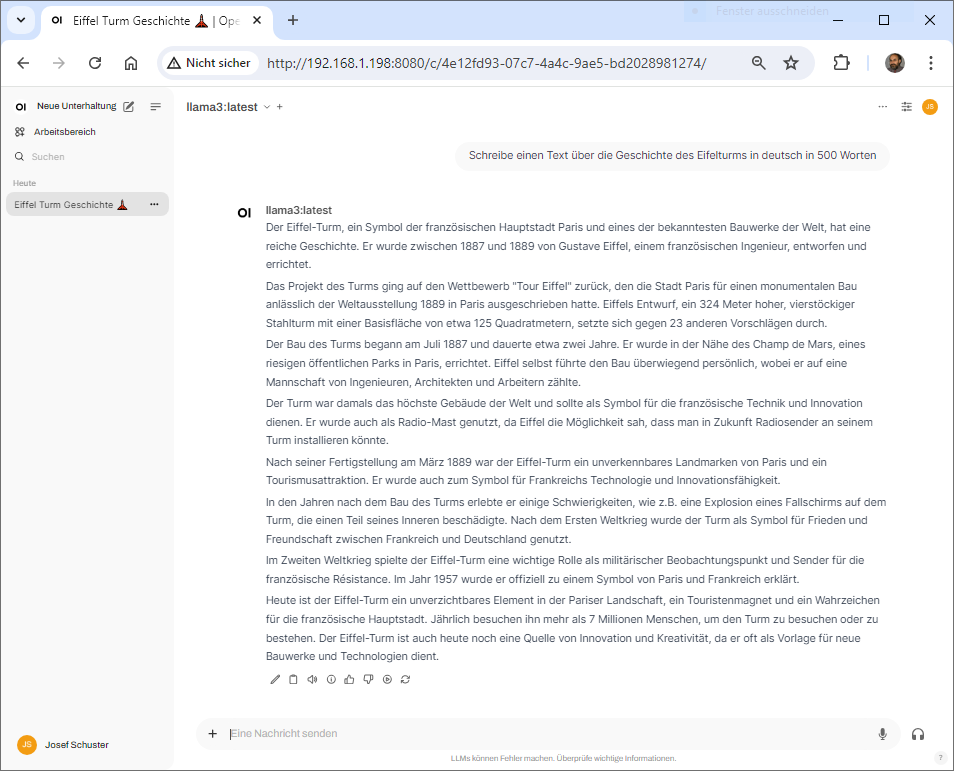

Thursday, July 11th, 2024

Ollama & Open WebUI - now available as an official Docker image

Thursday, July 11th, 2024

## Install Docker ##

root@pve-ai-llm-11:~# apt-get install curl

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# curl -fsSL https://get.docker.com -o get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# ls -la

total 48

drwx------ 4 root root 4096 Jul 30 09:32 .

drwxr-xr-x 21 root root 4096 Jul 30 09:23 ..

-rw-r--r-- 1 root root 3106 Apr 22 13:04 .bashrc

drwx------ 2 root root 4096 Jul 30 09:23 .cache

-rw-r--r-- 1 root root 161 Apr 22 13:04 .profile

drwx------ 2 root root 4096 May 7 09:12 .ssh

-rw-r--r-- 1 root root 21582 Jul 30 09:32 get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# chmod +x get-docker.sh

root@pve-ai-llm-11:~# ls -la

total 48

drwx------ 4 root root 4096 Jul 30 09:32 .

drwxr-xr-x 21 root root 4096 Jul 30 09:23 ..

-rw-r--r-- 1 root root 3106 Apr 22 13:04 .bashrc

drwx------ 2 root root 4096 Jul 30 09:23 .cache

-rw-r--r-- 1 root root 161 Apr 22 13:04 .profile

drwx------ 2 root root 4096 May 7 09:12 .ssh

-rwxr-xr-x 1 root root 21582 Jul 30 09:32 get-docker.sh

root@pve-ai-llm-11:~#

root@pve-ai-llm-11:~# ./get-docker.sh

# Executing docker install script, commit: 1ce4e39c9502b89728cdd4790a8c3895709e358d

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq ca-certificates curl >/dev/null

+ sh -c install -m 0755 -d /etc/apt/keyrings

+ sh -c curl -fsSL "https://download.docker.com/linux/ubuntu/gpg" -o /etc/apt/keyrings/docker.asc

+ sh -c chmod a+r /etc/apt/keyrings/docker.asc

+ sh -c echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu noble stable" > /etc/apt/sources.list.d/docker.list

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq docker-ce docker-ce-cli containerd.io docker-compose-plugin docker-ce-rootless-extras docker-buildx-plugin >/dev/null

+ sh -c docker version

Client: Docker Engine - Community

Version: 27.1.1

API version: 1.46

Go version: go1.21.12

Git commit: 6312585

Built: Tue Jul 23 19:57:14 2024

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 27.1.1

API version: 1.46 (minimum version 1.24)

Go version: go1.21.12

Git commit: cc13f95

Built: Tue Jul 23 19:57:14 2024

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.7.19

GitCommit: 2bf793ef6dc9a18e00cb12efb64355c2c9d5eb41

runc:

Version: 1.1.13

GitCommit: v1.1.13-0-g58aa920

docker-init:

Version: 0.19.0

GitCommit: de40ad0

================================================================================

To run Docker as a non-privileged user, consider setting up the

Docker daemon in rootless mode for your user:

dockerd-rootless-setuptool.sh install

Visit https://docs.docker.com/go/rootless/ to learn about rootless mode.

To run the Docker daemon as a fully privileged service, but granting non-root

users access, refer to https://docs.docker.com/go/daemon-access/

WARNING: Access to the remote API on a privileged Docker daemon is equivalent

to root access on the host. Refer to the 'Docker daemon attack surface'

documentation for details: https://docs.docker.com/go/attack-surface/

================================================================================

root@pve-ai-llm-11:~#
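The session above runs the downloaded script directly as root. A slightly more cautious variant is sketched below: it previews what the script would do before anything is installed. `preview_install` is a hypothetical helper name chosen for this note, not part of Docker. The `--dry-run` option is the one the convenience script itself advertises.

```shell
#!/bin/sh
# Sketch only: preview the Docker convenience script before running it.
# preview_install is a hypothetical helper, not something Docker ships.
preview_install() {
    script="$1"
    # Stop early if the download is missing or empty.
    [ -s "$script" ] || { echo "missing or empty: $script" >&2; return 1; }
    # --dry-run makes the script print its steps instead of executing them.
    sh "$script" --dry-run
}
```

Possible usage, assuming the same download step as above: `curl -fsSL https://get.docker.com -o get-docker.sh && preview_install get-docker.sh`. If the printed steps look right, run `sh get-docker.sh` afterwards.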

## With a CPU only ##

root@pve-ai-llm-11:~# docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama --restart always ollama/ollama

Unable to find image 'ollama/ollama:latest' locally

latest: Pulling from ollama/ollama

7646c8da3324: Pull complete

128e3f309605: Pull complete

44384cad8fa3: Pull complete

Digest: sha256:35f2654eaa3897bd6045afc2b06b4ac00c64de9f41dc9f6a8d9f51c02cfd6d30

Status: Downloaded newer image for ollama/ollama:latest

a2e7dd96f5ba6d95f249704bb68c866fa356414a99a19e09bdbe5b3f07ab04c9

root@pve-ai-llm-11:~#
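`docker run -d` returns as soon as the container is created, so the API on port 11434 may need a moment before it answers. A minimal sketch for waiting on it follows. `wait_for_http` is a hypothetical helper, and the retry count and delay are arbitrary defaults chosen here.

```shell
#!/bin/sh
# Sketch only: poll a URL until it answers or the retries run out.
# wait_for_http URL [TRIES] [DELAY_SECONDS] is a hypothetical helper.
wait_for_http() {
    url="$1"
    tries="${2:-30}"
    delay="${3:-1}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -f turns HTTP errors into a non-zero exit; the body is discarded.
        if curl -fsS -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

Possible usage against the port published above: `wait_for_http http://127.0.0.1:11434/api/tags && echo "Ollama is up"`. The `/api/tags` endpoint lists the locally available models.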

root@pve-ai-llm-11:~# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:1408fec50309afee38f3535383f5b09419e6dc0925bc69891e79d84cc4cdcec6

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

root@pve-ai-llm-11:~#

## Run a model ##

root@pve-ai-llm-11:~# docker exec -it ollama ollama run llama3

pulling manifest

pulling 6a0746a1ec1a... 100% ▕█████████████████████████████████████████████▏ 4.7 GB

pulling 4fa551d4f938... 100% ▕█████████████████████████████████████████████▏ 12 KB

pulling 8ab4849b038c... 100% ▕████████████████████████████████████████████▏ 254 B

pulling 577073ffcc6c... 100% ▕█████████████████████████████████████████████▏ 110 B

pulling 3f8eb4da87fa... 100% ▕████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

removing any unused layers

success

>>> help

I’d be happy to help you with whatever you need. Please let me know what’s on your mind and how I can assist you.

Do you have a specific question or topic in mind, or are you just looking for some general guidance?

>>> /bye

root@pve-ai-llm-11:~#
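Besides the interactive prompt, the model pulled above can also be queried over the REST API on port 11434. The sketch below sends one non-streaming request to `/api/generate`. `json_escape` and `ollama_generate` are hypothetical helper names, `OLLAMA_URL` is an assumed override variable, and the escaping only covers backslashes and double quotes, so prompts with newlines or control characters are out of scope.

```shell
#!/bin/sh
# Sketch only: send a single prompt to the Ollama REST API.
# json_escape and ollama_generate are hypothetical helpers for this note.

# Escape backslashes and double quotes so the text fits in a JSON string.
# Newlines and control characters are NOT handled.
json_escape() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# ollama_generate MODEL PROMPT: POST to /api/generate with streaming disabled.
ollama_generate() {
    model="$1"
    prompt="$2"
    # OLLAMA_URL is an assumed override; the default matches -p 11434:11434.
    base="${OLLAMA_URL:-http://127.0.0.1:11434}"
    body=$(printf '{"model":"%s","prompt":"%s","stream":false}' \
        "$(json_escape "$model")" "$(json_escape "$prompt")")
    curl -fsS "$base/api/generate" -d "$body"
}
```

Possible usage: `ollama_generate llama3 "Why is the sky blue?"`. The reply is a JSON object whose `response` field contains the generated text.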

## Create the Open WebUI container ##

root@pve-ai-llm-11:~# docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Unable to find image 'ghcr.io/open-webui/open-webui:main' locally

main: Pulling from open-webui/open-webui

f11c1adaa26e: Pull complete

4ad0c7422f5c: Pull complete

f2bf536a1e4f: Pull complete

3bdbfec22900: Pull complete

83396b6ad4cc: Pull complete

82b2e523b77f: Pull complete

4f4fb700ef54: Pull complete

dc24a9093de1: Pull complete

dd27fb166be3: Pull complete

958fcb957c53: Pull complete

5b9147962751: Pull complete

ad63e135fcf4: Pull complete

9648b911f4a0: Pull complete

daf0cd29e6e0: Pull complete

edec677f39e7: Pull complete

aac4b2ca7a13: Pull complete

Digest: sha256:f53d1dbd8d9bd6a5297ba5efc63618df750b1dc0b5a8b0c1e5600380808eaf73

Status: Downloaded newer image for ghcr.io/open-webui/open-webui:main

25e84cfbcecaf360f900078401905241d45c18858011da84f07776ee196f4b90

root@pve-ai-llm-11:~#
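With both containers started, a quick status check can confirm that `ollama` and `open-webui` are actually running. This is a minimal sketch: `container_running` and `report_stack` are hypothetical helper names, and the two container names are the ones passed to `--name` above.

```shell
#!/bin/sh
# Sketch only: report whether the two containers from this session are up.
# container_running and report_stack are hypothetical helpers.

# container_running NAME: succeed if a running container has exactly this name.
container_running() {
    docker ps --filter status=running --format '{{.Names}}' | grep -qxF -- "$1"
}

# Print one status line per expected container; return 1 if any is down.
report_stack() {
    rc=0
    for name in ollama open-webui; do
        if container_running "$name"; then
            echo "$name: running"
        else
            echo "$name: NOT running"
            rc=1
        fi
    done
    return "$rc"
}
```

Possible usage: `report_stack && echo "stack is up"`. Because the container uses `--network=host`, Open WebUI should then be reachable on the host itself, by default at http://127.0.0.1:8080 according to the Open WebUI documentation.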